Biology Inspires a New Kind of Water-Based Circuit That Could Transform Computing


October 2, 2022 By Editor

October 1, 2022 By Editor

Considering the seismic shocks that our world has endured over the last two years alone, it seems unwise to engage in predictions about what it will look like in ten years or more.

But what we can predict with certainty is that energy consumption will remain one of humanity’s biggest concerns. And the urgency of the fight against climate change will be even more pressing than it is now.

Given these two truths, we can no longer rely on fossil fuels to drive economic growth.

Speaking of growth, experts expect the IT sector to keep booming. That’s good news, since digital technologies contribute to greater energy efficiency and sustainability. Take telepresence, for instance, which can reduce our need for travel.

Nevertheless, the sustainability challenge is so massive that we can’t afford to ignore the environmental impact of the IT infrastructure itself.

Luckily, consumers and the industry are increasingly aware of this impact. Energy efficiency is becoming a valid selling point for devices such as smartphones and laptops. And especially with the debate around the environmental cost of cryptocurrencies, nobody can claim ignorance of the potential impact of data centers on our global energy consumption.

One of the areas where awareness is comparatively lacking is the energy cost of our wireless network infrastructure. Vendors of base stations are starting to look into the energy efficiency of their devices, but network operators are slow to consider the total energy cost of their operations.

From their viewpoint, that’s understandable. The complexity of such a consideration is substantial. And when we move beyond 5G, that complexity will only increase. The good news? Our models for assessing that impact are also becoming more sophisticated.

More base stations, or more power?

Although the details are still under discussion, it’s already clear that 6G will encompass several hardware innovations. Examples are sharing of spectrum and infrastructure, cell-free massive MIMO, and the convergence of communication and sensing. But, most importantly, 6G will require a shift to higher frequencies—above 100 GHz.

These factors will add to the evolution that has already started with 5G towards more complex network architectures. For one thing, a move to (much) higher frequencies will often mean that each base station’s range will become (much) shorter. That generally leads to a need for more base stations to ensure complete coverage at the highest capacity.
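To see why higher frequencies tend to mean more sites, here is a minimal sketch based on the free-space (Friis) path-loss formula. It assumes isotropic antennas, line of sight, and a fixed, hypothetical link budget of 120 dB; real mmWave and sub-THz deployments use high-gain antenna arrays that partly offset the extra loss, so the site counts illustrate the scaling rather than a planning figure. The function names are illustrative, not taken from any planning tool.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def fspl_db(distance_m: float, freq_hz: float) -> float:
    """Free-space path loss in dB between isotropic antennas (Friis)."""
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / C)


def max_range_m(link_budget_db: float, freq_hz: float) -> float:
    """Largest distance at which free-space loss still fits within the link budget."""
    return C / (4 * math.pi * freq_hz) * 10 ** (link_budget_db / 20)


def sites_needed(area_m2: float, link_budget_db: float, freq_hz: float) -> int:
    """Rough site count: covered area divided by the disc each site can serve."""
    r = max_range_m(link_budget_db, freq_hz)
    return math.ceil(area_m2 / (math.pi * r * r))


if __name__ == "__main__":
    budget_db = 120.0  # hypothetical maximum tolerable path loss
    for f_ghz in (3.5, 28.0, 140.0):
        f_hz = f_ghz * 1e9
        print(f"{f_ghz:>6} GHz: range {max_range_m(budget_db, f_hz):8.1f} m, "
              f"sites per km^2: {sites_needed(1e6, budget_db, f_hz)}")
```

Under these assumptions the range scales as 1/f, so the area each site covers scales as 1/f²: a carrier frequency ten times higher implies roughly a hundred times as many sites for the same link budget.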

Is that bad news from the perspective of energy consumption?

The short answer is yes. As a general rule, it’s more wasteful to add base stations than to increase the output power of an existing station. There’s a straightforward reason for this: adding more base stations means decoupling shared resources such as cooling, which diminishes the overall energy efficiency.

That’s one of the reasons why massive MIMO is already a valuable addition to wireless connectivity technologies for 5G. It doesn’t increase the power consumption per base station. Meanwhile, it expands the range at the network level, and enables faster communication towards multiple users in parallel.

So is it a good idea to increase the power levels of the base stations even further to reduce the need for additional base stations? Maybe, from a purely theoretical perspective. But in the real world, obstacles frequently pop up, such as local and international EMF regulations that limit exposure to electromagnetic radiation.

Another real-world consideration in designing wireless networks goes beyond the number of clients within a given area to their bandwidth needs. After all, the bitrate also affects the power consumption of the base stations. Although 6G will be able to offer astronomical throughputs, should they be available everywhere, all the time?
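Why does bitrate cost power? A minimal sketch using the Shannon capacity bound for an additive white Gaussian noise channel gives the minimum signal-to-noise ratio, and hence the minimum received power, for a target rate in a fixed bandwidth. The 100 MHz channel and the thermal-noise floor of about -174 dBm/Hz are textbook assumptions, not figures from the article; real systems also pay for coding gaps, protocol overhead, and circuit power.

```python
import math

THERMAL_NOISE_W_PER_HZ = 10 ** (-174 / 10) / 1000  # about -174 dBm/Hz at room temperature


def min_snr(rate_bps: float, bandwidth_hz: float) -> float:
    """Shannon bound: smallest linear SNR that can carry rate_bps over bandwidth_hz."""
    return 2 ** (rate_bps / bandwidth_hz) - 1


def min_received_power_w(rate_bps: float, bandwidth_hz: float) -> float:
    """Minimum received signal power = required SNR x thermal noise power (N0 * B)."""
    return min_snr(rate_bps, bandwidth_hz) * THERMAL_NOISE_W_PER_HZ * bandwidth_hz


if __name__ == "__main__":
    bandwidth = 100e6  # hypothetical 100 MHz channel
    for gbps in (0.5, 1.0, 2.0):
        p = min_received_power_w(gbps * 1e9, bandwidth)
        print(f"{gbps} Gbit/s needs at least {10 * math.log10(p * 1000):.1f} dBm at the receiver")
```

In this bound, doubling the rate from 1 to 2 Gbit/s in the same 100 MHz raises the required SNR from 2^10 - 1 to 2^20 - 1, roughly a thousandfold increase, which is why provisioning peak throughput everywhere is expensive.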

Models to optimize the energy efficiency of 6G networks

If we’re serious about limiting the energy use of tomorrow’s complex wireless network infrastructures, we can’t continue being content with relatively simple and theoretical models.

The challenge lies in finding the optimal balance between the energy cost of adding more base stations and that of increasing the output power of each base station. That exercise has to be repeated for each concrete implementation, taking into account factors such as the physical environment, existing infrastructure, predefined installation criteria, the bandwidth needs of human and non-human users, EMF guidelines, and so on.
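The balance can be illustrated with a deliberately crude model, assuming identical circular cells, a fixed per-site overhead (cooling, baseband and so on), and an RF output that must grow as radius^alpha to reach the cell edge. All parameter values and function names are hypothetical, and the sketch is not how the RAN design tool described below works; it only shows why an optimum exists and how an EMF-style cap on output power can rule it out.

```python
import math


def network_power_w(radius_m, area_m2, p_fixed_w, k, alpha, pa_eff, p_tx_max_w=None):
    """Total power of a network of identical circular cells with the given radius.

    Each site draws p_fixed_w (cooling, baseband, ...) plus RF output / PA efficiency.
    The RF output needed to serve the cell edge is modelled as k * radius**alpha,
    with alpha the path-loss exponent. Returns math.inf if that output exceeds
    p_tx_max_w, standing in for an EMF exposure cap.
    """
    p_tx_w = k * radius_m ** alpha
    if p_tx_max_w is not None and p_tx_w > p_tx_max_w:
        return math.inf
    n_sites = area_m2 / (math.pi * radius_m ** 2)  # fractional sites: a smooth approximation
    return n_sites * (p_fixed_w + p_tx_w / pa_eff)


def optimal_radius_m(p_fixed_w, k, alpha, pa_eff):
    """Closed-form minimiser of network_power_w without a cap (requires alpha > 2).

    Setting d/dr [p_fixed * r**-2 + (k / pa_eff) * r**(alpha - 2)] = 0 gives
    r**alpha = 2 * p_fixed * pa_eff / ((alpha - 2) * k).
    """
    if alpha <= 2:
        raise ValueError("alpha must exceed 2 for an interior optimum")
    return (2 * p_fixed_w * pa_eff / ((alpha - 2) * k)) ** (1 / alpha)


if __name__ == "__main__":
    params = dict(area_m2=1e6, p_fixed_w=300.0, k=1e-7, alpha=3.5, pa_eff=0.3)
    r_opt = optimal_radius_m(params["p_fixed_w"], params["k"], params["alpha"], params["pa_eff"])
    print(f"optimal radius {r_opt:.0f} m, network power {network_power_w(r_opt, **params):.0f} W")
    # With a hypothetical 50 W cap on RF output, that optimum becomes infeasible.
    print(network_power_w(r_opt, p_tx_max_w=50.0, **params))
```

Two features of the closed form echo the text: a larger fixed overhead per site pushes the optimum towards fewer, larger cells, and an exposure cap can make that optimum unreachable, forcing more sites at lower power.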

The WAVES research group of imec at Ghent University has developed a technology- and vendor-agnostic radio access network (RAN) design tool for precisely such purposes. Creating a 3D model of the area and populating it with virtual users enables network designers to calculate the number, locations, and power levels of base stations needed to ensure optimal coverage within a given area. It already supports a variety of technologies and will be continuously updated to include emerging ones such as mmWave.

The best way to boost 6G energy efficiency

The key is to use tools that can manage both the complexity of our wireless networks and that of the real world. That enables us to minimize energy consumption without compromising the quality of service.

These tools will help limit the share of the world’s energy budget that wireless connectivity consumes. But they will only take us so far. At the network level, none of the technologies considered for 6G will offer us more degrees of freedom than the ones we have now: the power levels of the base stations, their locations, and smart adaptation to changing data traffic demands.

If we want to control the energy use of our wireless networks, the heavy lifting will have to be done at the device level. By exploring new materials and architectures, we should be able to decouple a leap in performance from a commensurate rise in energy consumption. For instance, III-V technologies not only enable more efficient power amplifiers; they also drive optimal architectures towards fewer antennas and analog components.

September 30, 2022 By Editor

September 29, 2022 By Editor

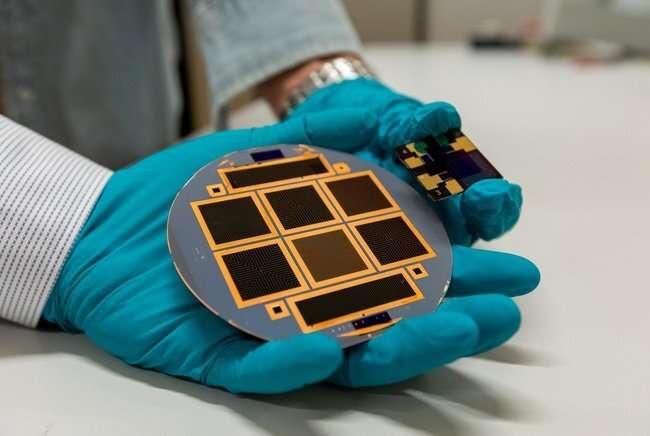

TNO, TU Eindhoven, imec and TU Delft, partners in Solliance, joined forces to push the conversion efficiency of tandem solar cells beyond the limits of today’s commercial photovoltaic (PV) modules. For the first time, four-terminal perovskite/silicon tandem devices with a certified top cell have passed the 30% barrier. Such high efficiency means more power per square meter and lower cost per kWh. The result, presented at the 8th World Conference on Photovoltaic Energy Conversion (WCPEC-8) in Milan, was achieved by combining the emerging perovskite solar cell with conventional silicon solar cell technologies. The perovskite cell, which features transparent contacts and forms the top of the tandem stack, has been independently certified.

Additionally, achieving a high power density will create more opportunities to integrate these solar cells into construction and building elements, so that more of the existing surface area can be covered with PV modules. Breaking the 30% barrier is therefore a big step towards accelerating the energy transition and improving energy security by reducing dependency on fossil fuels.

The best of both worlds

Tandem devices can reach higher efficiencies than single junction solar cells because of a better utilization of the solar spectrum. The currently emerging tandems combine commercial silicon technology for the bottom device with perovskite technology: the latter featuring highly efficient conversion of ultraviolet and visible light and excellent transparency to near infrared light.

In four-terminal (4T) tandem devices, the top and bottom cells operate independently of each other, which makes it possible to use different bottom cells in this kind of device. Commercial PERC technology, premium technologies such as heterojunction or TOPCon, and thin-film technologies such as CIGS can all be implemented in a 4T tandem device with hardly any modifications to the solar cells. Furthermore, the four-terminal architecture makes it straightforward to implement bifacial tandems to further boost the energy yield.

Researchers from the Netherlands and Belgium have improved the efficiency of the semi-transparent perovskite cells to 19.7% on an area of 3×3 mm², as certified by ESTI (Italy). “This type of solar cell features a highly transparent back contact that allows over 93% of the near infrared light to reach the bottom device. This performance was achieved by optimizing all layers of the semi-transparent perovskite solar cells using advanced optical and electrical simulations as a guide for the experimental work in the lab,” says Dr. Mehrdad Najafi of TNO.

“The silicon device is a 20×20 mm² heterojunction solar cell featuring optimized surface passivation, transparent conductive oxides and Cu-plated front contacts for state-of-the-art carrier extraction,” says Yifeng Zhao, Ph.D. student at TU Delft, whose results have recently been published in Progress in Photovoltaics: Research and Applications. Stacked optically under the perovskite, the silicon device contributes 10.4 percentage points to the total solar energy conversion efficiency.

Combined, this non-area-matched 4T tandem device, with its two cells operating independently, reaches a conversion efficiency of 30.1%. This world-best efficiency was measured according to generally accepted procedures.
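The 30.1% figure is simple addition: in a four-terminal stack each sub-cell has its own terminals, so the top cell's 19.7% and the filtered bottom cell's 10.4 percentage points add directly. The sketch reproduces that arithmetic and converts the result to output power per square meter at the standard test irradiance of 1000 W/m². It ignores module-level losses and the different areas of the two cells, so it is a back-of-the-envelope illustration of "more power per square meter", not a module rating; the constant and function names are illustrative.

```python
# Efficiencies reported above, in percent (absolute percentage points).
TOP_PEROVSKITE_PCT = 19.7   # semi-transparent perovskite top cell, 3x3 mm^2, certified
BOTTOM_SILICON_PCT = 10.4   # heterojunction Si cell measured under the perovskite

STC_IRRADIANCE_W_PER_M2 = 1000.0  # standard test condition irradiance


def four_terminal_efficiency(top_pct: float, filtered_bottom_pct: float) -> float:
    """Each 4T sub-cell has its own terminals, so their output powers (and efficiencies) add."""
    return top_pct + filtered_bottom_pct


def power_density_w_per_m2(efficiency_pct: float,
                           irradiance_w_per_m2: float = STC_IRRADIANCE_W_PER_M2) -> float:
    """Electrical output per square meter at the given irradiance."""
    return efficiency_pct / 100 * irradiance_w_per_m2


if __name__ == "__main__":
    eta = four_terminal_efficiency(TOP_PEROVSKITE_PCT, BOTTOM_SILICON_PCT)
    print(f"tandem efficiency: {eta:.1f} %")
    print(f"output at STC: {power_density_w_per_m2(eta):.0f} W/m^2")
```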

Future of four-terminal tandem PV modules

This is not all: Combining this highly transparent perovskite cell with other silicon-based technologies, such as back contact (metal wrap through and interdigitated back contact cells) and TOPCon solar cells, has also delivered conversion efficiencies approaching 30%. This demonstrates the potential of highly transparent perovskite solar cells and their flexibility to be combined with already commercialized technologies.

These world-best efficiencies, obtained with a multitude of incumbent technologies, are a further milestone towards industrial deployment. “Now we know the ingredients and are able to control the layers that are needed to reach over 30% efficiency. Once combined with the scalability expertise and knowledge gathered in the past years to bring material and processes to large area, we can focus with our industrial partners to bring this technology with efficiencies beyond 30% into mass production,” says Prof. Gianluca Coletti, Program Manager Tandem PV technology and application at TNO.

September 28, 2022 By Editor

When producing silicon solar cells, it is important to have a high throughput. This reduces production costs and alleviates supply bottlenecks as more photovoltaic installations are deployed in Germany and worldwide. To respond to this need, a consortium of plant manufacturers, metrology companies and research institutions headed by the Fraunhofer Institute for Solar Energy Systems ISE has come up with a proof of concept for an innovative production line with a throughput of 15,000 to 20,000 wafers per hour. This is double the usual throughput and results from improvements to several individual process steps.

This week’s eighth World Conference on Photovoltaic Energy Conversion in Milan, Italy, will see the presentation of detailed results from the research project.

“In 2021, 78% of all silicon solar cells were produced in China,” explains Dr.-Ing. Ralf Preu, Division Director of PV Production Technology at Fraunhofer ISE. “In order to deploy more solar installations as quickly as possible and to make our supply chains more robust, Europe should re-establish its own production centers for high-efficiency solar cells. By boosting throughput and making production technology more resource-efficient, we can cut costs considerably and unlock sustainability potential that we will be able to leverage thanks to process knowledge and engineering excellence.”

New concepts for silicon solar cell production

The consortium investigated every stage of the production of high-efficiency silicon solar cells to optimize the entire process. Several process steps required new developments. “For some processes, established production workflows needed to be accelerated, other processes needed to be reinvented from scratch,” explains Dr. Florian Clement, project manager at Fraunhofer ISE. “Compared to the numbers we currently see, the production systems developed within the scope of the project achieve at least double the throughput.”
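Why revisit every stage rather than one? In a serial line, the slowest step sets the pace. A minimal bottleneck model makes this concrete; the capacities below are invented for illustration, and only the step names loosely echo the article. Doubling one step merely exposes the next-slowest one.

```python
from dataclasses import dataclass


@dataclass
class Step:
    name: str
    wafers_per_hour: float  # capacity of a single tool
    tools: int = 1          # identical tools running in parallel

    @property
    def capacity(self) -> float:
        return self.wafers_per_hour * self.tools


def line_throughput(steps):
    """A serial production line can run no faster than its slowest step."""
    bottleneck = min(steps, key=lambda s: s.capacity)
    return bottleneck.capacity, bottleneck.name


# Hypothetical capacities in wafers per hour.
baseline = [
    Step("laser processing", 9_000),
    Step("diffusion + oxidation", 8_000),
    Step("metallization", 10_000),
    Step("contact firing", 9_500),
    Step("cell testing", 8_500),
]

if __name__ == "__main__":
    print(line_throughput(baseline))
    # Doubling only the slowest step moves the bottleneck to the next-slowest one.
    upgraded = [
        Step(s.name, s.wafers_per_hour * 2 if s.name == "diffusion + oxidation" else s.wafers_per_hour)
        for s in baseline
    ]
    print(line_throughput(upgraded))
```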

One of the new developments is on-the-fly laser equipment that continuously processes the wafers as they move at high speed under the laser scanner. For the metallization of solar cells, the consortium introduced rotary screen printing in place of the current standard process, flatbed screen printing.

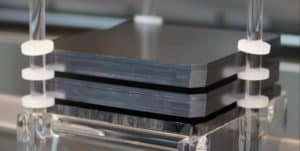

Stack diffusion and oxidization

Solar cells require differently doped regions, for example where the silicon meets the metal contacts. The Fraunhofer ISE researchers integrated the diffusion process used to create these regions and the thermal oxidization of the wafers into a single process step.

Wafers are no longer placed individually but stacked on top of each other to be processed in the furnace. The oxidization step creates the final doping profile and passivates the surface at the same time, increasing the throughput of the process by a factor of 2.4.

Faster inline furnace processes

After the electrodes are printed onto the solar cells, their contacts to the silicon are formed on both sides in inline furnaces. With standard furnaces, increasing throughput at this stage would have required a significantly larger heating chamber.

Instead, the project consortium tripled the belt speed in the furnace and compared the quality of the sintered solar cells with today’s standard. They were able to significantly increase throughput without compromising the efficiency of the solar cells.
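The furnace trade-off is geometric. On a continuously moving belt, throughput grows with belt speed, but the time each wafer spends in the hot zone equals the heated length divided by the speed. Keeping the original dwell time at a higher speed therefore needs a proportionally longer hot zone, which is one way to read the "significantly larger heating chamber" above. The sketch assumes a single lane and made-up dimensions; the article does not give the consortium's furnace settings.

```python
def throughput_per_hour(belt_speed_m_s: float, wafer_pitch_m: float, lanes: int = 1) -> float:
    """Wafers per hour leaving a continuously moving belt."""
    return belt_speed_m_s / wafer_pitch_m * lanes * 3600


def dwell_time_s(heated_length_m: float, belt_speed_m_s: float) -> float:
    """Time each wafer spends inside the heated zone."""
    return heated_length_m / belt_speed_m_s


def heated_length_for_dwell_m(belt_speed_m_s: float, dwell_s: float) -> float:
    """Heated length required to keep a given dwell time at a given belt speed."""
    return belt_speed_m_s * dwell_s


if __name__ == "__main__":
    v0, pitch, hot_zone = 0.1, 0.2, 2.0  # hypothetical: m/s, m between wafers, m of heated length
    t0 = dwell_time_s(hot_zone, v0)
    print(f"baseline: {throughput_per_hour(v0, pitch):.0f} wafers/h, {t0:.0f} s dwell")
    # Option A: triple the belt speed but keep the dwell time -> the hot zone must triple.
    print(f"hot zone needed at 3x speed: {heated_length_for_dwell_m(3 * v0, t0):.1f} m")
    # Option B: triple the belt speed in the same hot zone -> one third of the dwell time.
    print(f"3x speed, same zone: {throughput_per_hour(3 * v0, pitch):.0f} wafers/h, "
          f"{dwell_time_s(hot_zone, 3 * v0):.1f} s dwell")
```

If the heated length stays the same, the quality comparison amounts to checking that a third of the dwell time still forms good contacts.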

Contactless testing and analysis of defects

For the characterization of the complete solar cells, the consortium devised two concepts. A contactless method and a method using sliding contacts were implemented to enable future production lines to test cells faster.

This makes it possible to maintain a continuous speed of 1.9 meters per second while measuring the cells, and the team demonstrated high measurement accuracy for both concepts. A patent has been filed for the contactless method.
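Measuring on the fly means each cell sits in the right place only briefly. A minimal sketch converts the reported 1.9 m/s into the time a whole cell spends inside a measurement zone and the resulting single-lane rate. The zone length, the 182 mm cell size and the 0.4 m spacing are hypothetical values, not given in the article, and real testers may not need the whole cell inside the zone at once.

```python
BELT_SPEED_M_S = 1.9  # continuous speed reported for the measurement stage


def full_coverage_window_s(zone_length_m: float, cell_length_m: float,
                           speed_m_s: float = BELT_SPEED_M_S) -> float:
    """Time during which a moving cell lies entirely inside a measurement zone.

    The cell is fully covered only while both of its edges are inside the zone,
    so the usable travel distance is zone_length - cell_length.
    """
    usable_m = zone_length_m - cell_length_m
    return max(0.0, usable_m) / speed_m_s


def cells_per_hour(cell_pitch_m: float, speed_m_s: float = BELT_SPEED_M_S) -> float:
    """Single-lane rate for cells spaced cell_pitch_m apart on the belt."""
    return speed_m_s / cell_pitch_m * 3600


if __name__ == "__main__":
    cell, zone, pitch = 0.182, 0.30, 0.40  # hypothetical geometry in meters
    window_ms = full_coverage_window_s(zone, cell) * 1000
    print(f"measurement window: {window_ms:.0f} ms per cell")
    print(f"single-lane rate: {cells_per_hour(pitch):.0f} cells/h")
```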

September 27, 2022 By Editor

Scientists estimate that more than 95 percent of Earth’s oceans have never been observed, which means we have seen less of our planet’s ocean than we have the far side of the moon or the surface of Mars.

The high cost of powering an underwater camera for a long time, by tethering it to a research vessel or sending a ship to recharge its batteries, is a steep challenge preventing widespread undersea exploration.

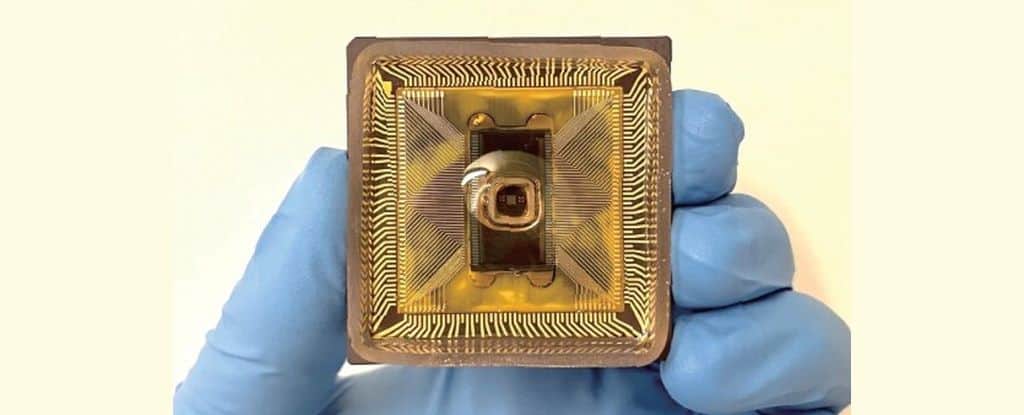

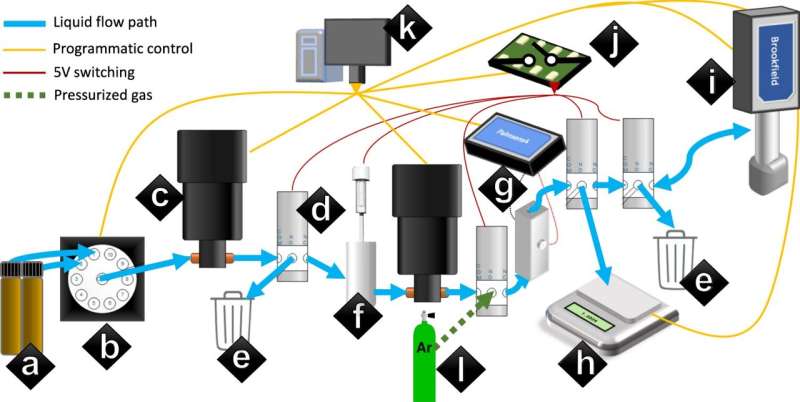

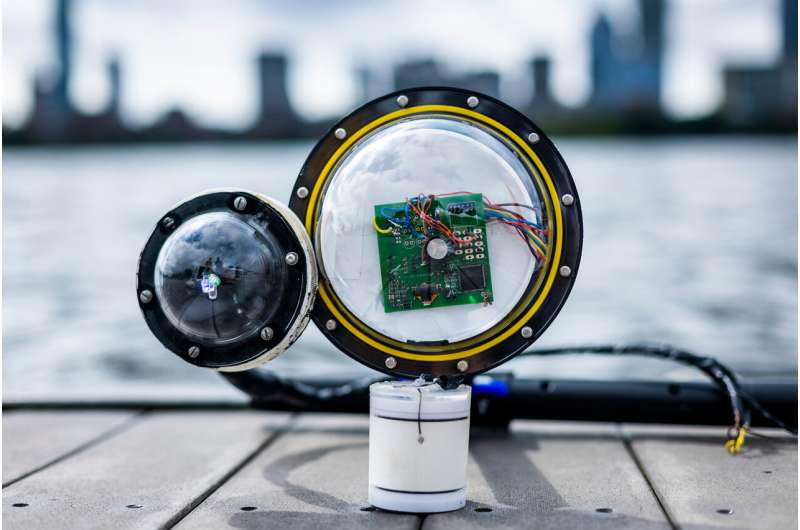

MIT researchers have taken a major step to overcome this problem by developing a battery-free, wireless underwater camera that is about 100,000 times more energy-efficient than other undersea cameras. The device takes color photos, even in dark underwater environments, and transmits image data wirelessly through the water.

The autonomous camera is powered by sound. It converts mechanical energy from sound waves traveling through water into electrical energy that powers its imaging and communications equipment. After capturing and encoding image data, the camera also uses sound waves to transmit data to a receiver that reconstructs the image.

Because it doesn’t need a battery or a tethered power supply, the camera could run for weeks on end before retrieval, enabling scientists to search remote parts of the ocean for new species. It could also be used to capture images of ocean pollution or monitor the health and growth of fish raised in aquaculture farms.

“One of the most exciting applications of this camera for me personally is in the context of climate monitoring. We are building climate models, but we are missing data from over 95 percent of the ocean. This technology could help us build more accurate climate models and better understand how climate change impacts the underwater world,” says Fadel Adib, associate professor in the Department of Electrical Engineering and Computer Science and director of the Signal Kinetics group in the MIT Media Lab, and senior author of the paper.

Joining Adib on the paper are co-lead authors and Signal Kinetics group research assistants Sayed Saad Afzal, Waleed Akbar, and Osvy Rodriguez, as well as research scientist Unsoo Ha, and former group researchers Mario Doumet and Reza Ghaffarivardavagh. The paper is published in Nature Communications.

Going battery-free

To build a camera that could operate autonomously for long periods, the researchers needed a device that could harvest energy underwater on its own while consuming very little power.

The camera acquires energy using transducers made from piezoelectric materials that are placed around its exterior. Piezoelectric materials produce an electric signal when a mechanical force is applied to them. When a sound wave traveling through the water hits the transducers, they vibrate and convert that mechanical energy into electrical energy.

Those sound waves could come from any source, like a passing ship or marine life. The camera stores harvested energy until it has built up enough to power the electronics that take photos and communicate data.
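The "store until there is enough" behavior can be sketched as a simple energy budget. The storage element is assumed to be a capacitor (E = ½CV²); the capacitance, voltages, leakage term, harvest trace and function names are all hypothetical, since the article does not describe the camera's power-management circuit.

```python
import math


def stored_energy_j(capacitance_f: float, voltage_v: float) -> float:
    """Energy in a capacitor: E = 1/2 * C * V^2."""
    return 0.5 * capacitance_f * voltage_v ** 2


def time_to_charge_s(capacitance_f, v_start, v_target, harvested_w, leakage_w=0.0):
    """Seconds of harvesting needed to lift the storage capacitor from v_start to v_target."""
    net_w = harvested_w - leakage_w
    if net_w <= 0:
        return math.inf  # leakage eats everything, so the node never wakes up
    needed_j = stored_energy_j(capacitance_f, v_target) - stored_energy_j(capacitance_f, v_start)
    return needed_j / net_w


def run_harvest_loop(harvest_j_per_step, task_energy_j, capacity_j):
    """Accumulate harvested energy step by step; run a task whenever enough is stored.

    Returns the indices of the steps in which a task (for example one
    capture-and-transmit cycle) ran. Energy beyond capacity_j is lost.
    """
    stored_j = 0.0
    task_steps = []
    for step, harvested_j in enumerate(harvest_j_per_step):
        stored_j = min(capacity_j, stored_j + harvested_j)
        if stored_j >= task_energy_j:
            stored_j -= task_energy_j
            task_steps.append(step)
    return task_steps


if __name__ == "__main__":
    # Hypothetical: a 1 mF capacitor charged from 0 V to 2 V by 100 uW of net harvested power.
    print(f"time to charge: {time_to_charge_s(1e-3, 0.0, 2.0, 100e-6):.0f} s")
    # Hypothetical harvest trace in joules per one-second step: a ship passes, then silence.
    trace = [0.0, 4e-4, 6e-4, 6e-4, 2e-4, 0.0, 0.0, 5e-4, 5e-4]
    print("tasks ran in steps:", run_harvest_loop(trace, task_energy_j=1e-3, capacity_j=2e-3))
```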

To keep power consumption as low as possible, the researchers used off-the-shelf, ultra-low-power imaging sensors. But these sensors only capture grayscale images. And since most underwater environments lack a light source, they needed to develop a low-power flash, too.

“We were trying to minimize the hardware as much as possible, and that creates new constraints on how to build the system, send information, and perform image reconstruction. It took a fair amount of creativity to figure out how to do this,” Adib says.

They solved both problems simultaneously using red, green, and blue LEDs. When the camera captures an image, it shines a red LED and then uses image sensors to take the photo. It repeats the same process with green and blue LEDs.

Even though the image looks black and white, the red, green, and blue colored light is reflected in the white part of each photo, Akbar explains. When the image data are combined in post-processing, the color image can be reconstructed.

“When we were kids in art class, we were taught that we could make all colors using three basic colors. The same rules follow for color images we see on our computers. We just need red, green, and blue—these three channels—to construct color images,” he says.
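The color trick amounts to treating each LED exposure as one channel of an RGB image: a white object reflects all three colors, so it is bright in all three grayscale frames and ends up as a white pixel. A minimal sketch on plain Python lists of 8-bit readings; the optional per-channel gains (for example to compensate for LEDs of unequal brightness) are a hypothetical addition, and the researchers' actual post-processing may differ.

```python
def reconstruct_color(red, green, blue, gains=(1.0, 1.0, 1.0)):
    """Combine three grayscale exposures, one per LED color, into rows of (R, G, B) pixels.

    red, green, blue: equally sized lists of rows of 0..255 sensor readings.
    gains: hypothetical per-channel correction; results are clamped to 255.
    """
    if not (len(red) == len(green) == len(blue)):
        raise ValueError("exposures must have the same number of rows")
    image = []
    for r_row, g_row, b_row in zip(red, green, blue):
        if not (len(r_row) == len(g_row) == len(b_row)):
            raise ValueError("exposures must have the same number of columns")
        image.append([
            tuple(min(255, round(v * k)) for v, k in zip((r, g, b), gains))
            for r, g, b in zip(r_row, g_row, b_row)
        ])
    return image


if __name__ == "__main__":
    # A 1x3 hypothetical scene: a red object, a green object and a white object.
    red_frame = [[220, 10, 230]]
    green_frame = [[15, 200, 225]]
    blue_frame = [[12, 20, 228]]
    print(reconstruct_color(red_frame, green_frame, blue_frame))
```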

Sending data with sound

Once image data are captured, they are encoded as bits (1s and 0s) and sent to a receiver one bit at a time using a process called underwater backscatter. The receiver transmits sound waves through the water to the camera, which acts as a mirror to reflect those waves. The camera either reflects a wave back to the receiver or changes its mirror to an absorber so that it does not reflect back.

A hydrophone next to the transmitter senses if a signal is reflected back from the camera. If it receives a signal, that is a bit-1, and if there is no signal, that is a bit-0. The system uses this binary information to reconstruct and post-process the image.
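The bit-by-bit backscatter link described above is essentially on-off keying: a reflective state stands for 1, an absorbing state for 0, and the receiver decides by comparing the echo strength with a threshold. The sketch simulates that idealized link with Gaussian noise; the amplitudes, noise level, midpoint threshold and the absence of framing or error correction are simplifying assumptions, not details of the MIT system.

```python
import random


def bytes_to_bits(data: bytes) -> list:
    """Most-significant bit first."""
    return [(byte >> (7 - i)) & 1 for byte in data for i in range(8)]


def bits_to_bytes(bits: list) -> bytes:
    """Inverse of bytes_to_bits; trailing bits that do not fill a byte are dropped."""
    out = bytearray()
    for start in range(0, len(bits) - len(bits) % 8, 8):
        value = 0
        for bit in bits[start:start + 8]:
            value = (value << 1) | bit
        out.append(value)
    return bytes(out)


def backscatter_link(bits, reflect_amplitude=1.0, noise_std=0.2, seed=0):
    """Camera side: 1 -> reflective state, 0 -> absorbing state.

    Returns the echo amplitude the hydrophone would see for each bit, with
    additive Gaussian noise standing in for the underwater channel.
    """
    rng = random.Random(seed)
    return [(reflect_amplitude if bit else 0.0) + rng.gauss(0.0, noise_std) for bit in bits]


def detect(echoes, threshold=0.5):
    """Receiver side: an echo above the threshold is a 1, otherwise a 0."""
    return [1 if echo > threshold else 0 for echo in echoes]


if __name__ == "__main__":
    pixels = bytes([0, 37, 128, 255])  # a few hypothetical 8-bit pixel values
    sent = bytes_to_bits(pixels)
    received = detect(backscatter_link(sent))
    print("bit errors:", sum(a != b for a, b in zip(sent, received)))
```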

“This whole process, since it just requires a single switch to convert the device from a nonreflective state to a reflective state, consumes five orders of magnitude less power than typical underwater communications systems,” Afzal says.

The researchers tested the camera in several underwater environments. In one, they captured color images of plastic bottles floating in a New Hampshire pond. They were also able to take such high-quality photos of an African starfish that tiny tubercles along its arms were clearly visible. The device was also effective at repeatedly imaging the underwater plant Aponogeton ulvaceus in a dark environment over the course of a week to monitor its growth.

Now that they have demonstrated a working prototype, the researchers plan to enhance the device so it is practical for deployment in real-world settings. They want to increase the camera’s memory so it could capture photos in real time, stream images, or even shoot underwater video.

They also want to extend the camera’s range. They successfully transmitted data 40 meters from the receiver, but extending that range would enable the camera to be used in more underwater settings.